November 20, 2025

We're hosting Unsupervised Learning again — a day to explore important recent developments in frontier AI safety. (You will be doing the unsupervised learning, not the models!)

Everything is optional, so drop in and out of sessions as you find useful. We’ll also have plenty of time for 1:1 meetings between people working on similar projects, and space to catch up on work and relax.

This event is for researchers from frontier AI companies and some independent research orgs, but it's invite-only, so please check with us before sharing.

Register here by end of day Friday, November 14, if you haven’t already. Last-minute attendees should contact isaac@constellation.org.

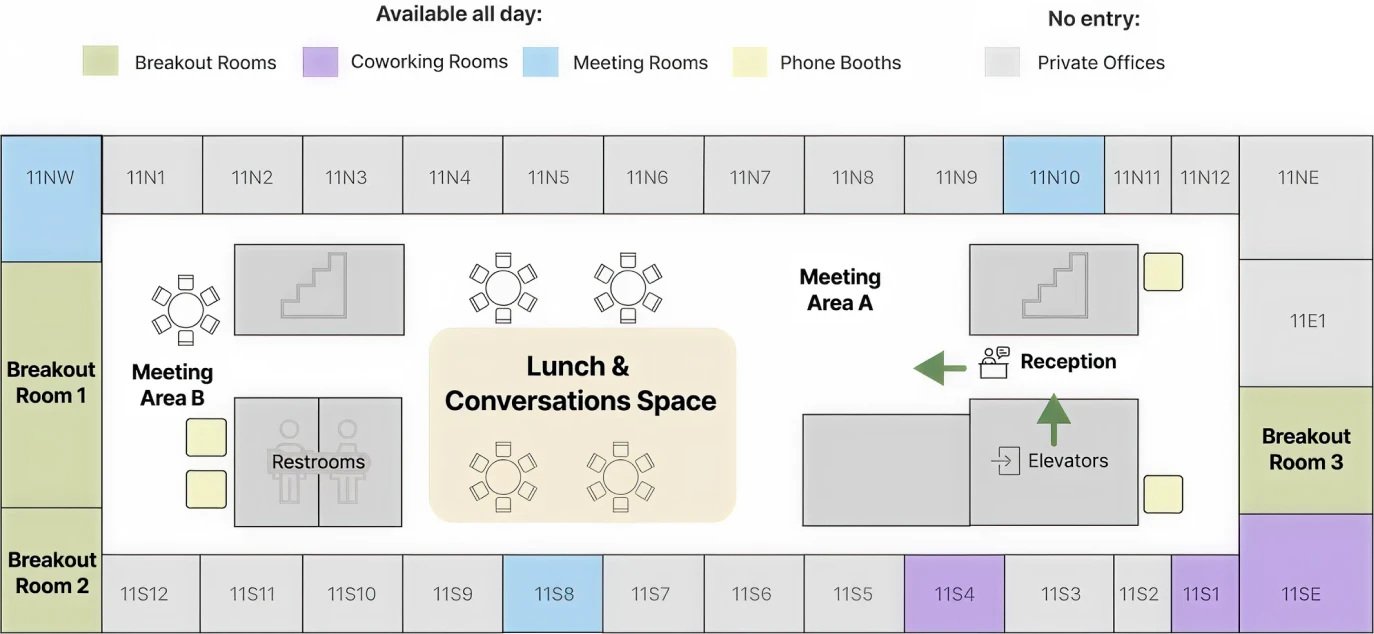

Feel free to use any coworking rooms, meeting rooms, common spaces, and phone booths. Do any activities that fit your schedule.

9:00 AM

Doors open for coworking and 1:1s

11th Floor

9:30 AM

Breakfast: Boichik bagels, Signal coffee, and Elaichi chai!

11th Floor

11:30 AM - 12:30 PM

Lightning talks on the future of misalignment

Lunch Area

Quick-fire presentations from researchers across participating organizations.

12:30 PM

Lunch

Lunch Area

1:30 PM - 2:00 PM

AI resilience

Breakout Room 1

Stress testing deliberative alignment for anti-scheming

Breakout Room 2

Natural misalignment from reward hacking in production RL

Breakout Room 3

2:00 PM - 2:30 PM

Break or 1:1s

2:30 PM - 3:00 PM

Q&A with Sam Bowman

Breakout Room 1

Extrapolating METR's agent time horizon graph

Breakout Room 2

Believe it or not: how deeply do LLMs believe implanted facts?

Breakout Room 3

3:00 PM - 3:30 PM

Break or 1:1s

3:30 PM - 4:00 PM

Q&A with John Schulman

Breakout Room 1

Improving alignment by shaping generalization

Breakout Room 2

Preventing Reward-Compatible Misalignment: Randomisation Games for Reinforcement Learning

Breakout Room 3

4:00 PM - 4:30 PM

Break or 1:1s

4:30 PM - 5:15 PM

A mainline plan for mitigating misalignment risk

Breakout Room 1

What should the spec be for very capable models?

Breakout Room 2

METR's plans for frontier risk assessment

Breakout Room 3

5:15 PM - 5:30 PM

Closing session: what next?

Lunch Area

5:30 PM - 6:00 PM

Break or 1:1s

6:00 PM

Breakout Space

Dinner

Lunch Area

You're welcome to stay as long as you like for dinner and more conversations.

Sessions will be hosted in Constellation’s workspace in Downtown Berkeley. Meals, snacks, and workspace amenities (workstations, call booths, meeting rooms) are available to all attendees.

The space will be open from 9:00 AM until late for conversations and coworking between sessions. Specialized workspace accommodations are available upon request (contact Isaac at isaac@constellation.org).

We welcome anyone excited to engage with others working on cutting-edge AI safety and frontier models, including thoughtful critics and people with different intellectual perspectives. Your questions and challenges help shape productive discussions and encourage ongoing dialogue.

We are an independent, nonprofit research center hosting over 200 individual researchers and organizations working on frontier problems in AI safety. You'll connect with many of our affiliates throughout the event, both as speakers and fellow attendees.

Interested in working from Constellation for a day? Just let isaac@constellation.org know the date you’ll be coming!

Our address is 2150 Shattuck Ave, Berkeley, CA. We are in the Chase Building right by the Downtown Berkeley BART. The entrance is off Shattuck Ave. Buzz yourself up from the lobby to floor 11. We will greet you! Call 1-510-200-8195 with any questions (don’t text).

Park in Downtown Berkeley. We recommend the Allston Way Garage (2061 Allston Way, Berkeley, CA 94704). You can enter from Allston Way or Center Street. After entering the lot, you can get free parking by using the code “Constellation” with AirGarage. More parking info is here.

We are committed to making this event accessible to all participants. Please contact us regarding accessibility accommodations, unusual dietary restrictions and food allergies, or any other needs to ensure your participation. All our meals cater for people with common allergies or restrictions by default.

Everyone is expected to be considerate, professional, and collaborative. We also expect people to follow the rules of their organizations. Here is our full Code of Conduct.

There is no specific confidentiality policy. As usual, respect the intentions of the people you speak with: check in before sharing something widely if it seemed to be shared in a more confidential context.

For questions, accommodation requests, or additional information: